Dear Friends,

For three of the past five years, we have spent January in Oaxaca. From the beginning, something told us to move here. Here’s what I journaled in January 2019:

Around 11, I went for a quick run along the river, where there is a wonderful dirt trail along each side that makes for an ideal 5-mile run. I became convinced that we should move here in 2024 after my term at Hewlett comes to an end.

It wasn’t the first time during a vacation that I wondered, “What if we moved here?” But something about Oaxaca was different. It still is. Five years later, we are making it our home, moving past the fantasizing, persisting through the bureaucracy, finding new routines, and learning where to buy peanut butter without added sugar. 🙄 It feels real. It feels right.

We’re about to take off for a 10-day trip to Colombia, where our dear friends

and Tony are getting married. It will be my first time in Cartagena, so if you have recommendations, please do share. Then we’ll spend five days in Medellín catching up with some dear friends from when I lived there in 2007 and 2008, when the city was emerging from a two-decade stretch as Latin America’s most violent and dangerous city. Today, it has become one of Latin America’s most popular cities for tourists and digital nomads, prompting all the usual Airbnb tensions that have played out in Lisbon, Barcelona, and Prague.

For this week, as AI moves from chatbots onto our cell phones, some thoughts about the definition of intelligence, labor, and what makes humans unique from computers:

Last year, we shrugged past an improbable civilizational milestone, the Turing test. And so I wanted to deposit a note into the time capsule: What does it mean in 2023 for a human to be intelligent? What does it mean to be conscious? And how can we prove that human intelligence and consciousness are unique from computers?

I first learned about Alan Turing and his proposed “imitation game” in an introductory cognitive science class in college.1 It was 2002, and Apple had just released the first iPod and their new operating system, OS X.

I purchased a new laptop, downloaded every mp3 I could find on LimeWire, and started to enjoy studying for the first time. The more I understood about computers, the more I wanted to learn. And the more I learned about the mysteries of the brain, the more I wanted to research.2

I couldn’t stop thinking about the test that Alan Turing first proposed in 1950 to “assess a machine's ability to exhibit intelligent behavior indistinguishable from a human.”3 My new laptop was great for downloading music, spell-checking my college essays, and helping me learn how to code, but could a future version’s responses truly become indistinguishable from a human’s within my lifetime? It didn’t seem likely.4

And then last year, it happened.5 We mostly shrugged. A few pieces of commentary focused on the need for a new, better test: “Researchers need new ways to distinguish artificial intelligence from the natural kind,” Gary Marcus wrote in Scientific American. And in Popular Mechanics: “The Turing Test for AI Is Far Beyond Obsolete.”

But I was still drawn to the fundamental questions from college: What is intelligence, anyway? What is consciousness? And how can humans prove our intelligence and consciousness to other humans (especially if our dependence on computers corrodes our social skills, homogenizes culture, and makes us more predictable)?6

I was captivated by

’s thought-provoking short story, The Consciousness Box, in which a human wakes up in a metal cube and must convince a remote examiner of his consciousness to be released. It inspired me to spend a few days reading the latest proposals to demonstrate human uniqueness from computers. In 2017, Gary Marcus suggested four new tests to distinguish human intelligence from computers, but humans are already lagging in all four areas. What about self-awareness? Is there an empirical test for self-awareness or consciousness that humans would pass, but computers could not? It doesn’t seem likely.7

I still associate intelligence with reasoning, pattern detection, and standardized tests, even if I should know better:8

But I prefer Paul Bloom’s definition of intelligence: “The capacity to achieve one's goals across a range of contexts.”9 After all, what’s the use of mastering calculus or learning a new language, if you don’t put it toward some goal?10 The Turing test was based on deception, and we have been deceived. With Bloom’s definition in mind, Mustafa Suleyman’s proposed replacement for the Turing Test makes more sense: “Instruct an AI agent to make $1 million on Amazon in a few months with just a $100,000 investment.”11 In this case, intelligence isn’t the performance on a standardized test; it’s the ability to achieve a goal.12

This line of thinking poses some useful questions for a middle-aged guy on a year-long sabbatical facing a career change (that’s me 🙋‍♂️): What goals are humans better at achieving? What goals are better suited for computers? How should we work together? And how will our goals evolve over the next 20 years as AI converges with 3D printing, robotics, genetic engineering, and quantum computing? Most importantly, what are my goals in the first place?

Perhaps what makes us uniquely human — developing authentic goals — is also life’s most daunting challenge. What do we truly want?13 For ourselves? Not what our parents, society, or even younger versions of ourselves expected — but what is it that we genuinely want to achieve in this moment, and why?14

That is my work for the next 11 months. What is my purpose and worth in a world where so many of the skills and knowledge I acquired in the first half of my life will be displaced by machines during the second half? What do I want to work on as a human, and what shall I delegate to the machines? (

has a thought-provoking reflection on the likelihood of spending years of work on a project made irrelevant by the forward march of AI.) I have a few ideas to deposit in the time capsule over the next weeks and months.

What about you? Has AI nudged you to consider changing projects, careers, hobbies, or what you want to learn? I’d love to hear about it — either in the comments below or by replying to the email. And thanks as always for reading. 🫶

David

Initially, I majored in philosophy, thinking it held the secrets to a meaningful life. After transferring to UCSD, I was enamored by the catalog description of cognitive science: “What is cognition? How do people, animals, or computers ‘think,’ act, and learn? To understand the mind/brain, cognitive science brings together methods and discoveries from neuroscience, psychology, linguistics, philosophy, and computer science.” Sometimes I wonder where my life would have led if I had persevered with the coursework.

I was especially intrigued by VS Ramachandran’s research on synesthesia and perception as windows into language and consciousness.

In 1999, Ray Kurzweil predicted that we’d surpass the Turing Test by 2029. In 2002, he turned his prediction into a $20,000 bet with Mitch Kapor. It’s interesting to read through the terms of the bet and how the comments have evolved over the past twenty years.

A 2001 paper about “The Status and Future of the Turing Test” notes that Turing himself didn’t like to make predictions, but did write in 1950 that “at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.” He was close.

I’m side-stepping the academic debate about whether computers passed Turing’s imitation game in 2009, 2022, 2023, or still not yet.

In You Are Not a Gadget, Jaron Lanier writes: “You can’t tell if a machine has gotten smarter or if you’ve just lowered your own standards of intelligence to such a degree that the machine seems smart. If you can have a conversation with a simulated person presented by an AI program, can you tell how far you’ve let your sense of personhood degrade in order to make the illusion work for you?” Similarly, in response to a Kafkaesque post office scandal in the UK, Hugo Rifkind writes, “The more we automate, the fewer people there will be who understand whatever we are automating, what numbers go in and what come out, and exactly what happens to them in between.”

I can’t get enough of

’s Substack on consciousness research. He writes:

To this day, neuroscience has no well-accepted theory of consciousness—what it is, how it works. While there is a small subfield of neuroscience engaged in what’s called the “search for the neural correlates of consciousness,” it took the efforts of many brave scholars, spear-headed by heavy-weights like two Nobel-Prize winners, Francis Crick and Gerald Edelman, to establish its marginal credibility. Previously, talk of consciousness had been effectively banished from science for decades (I’ve called this time the “consciousness winter” and shown that even the word “consciousness” declined in usage across culture as a whole during its reign).

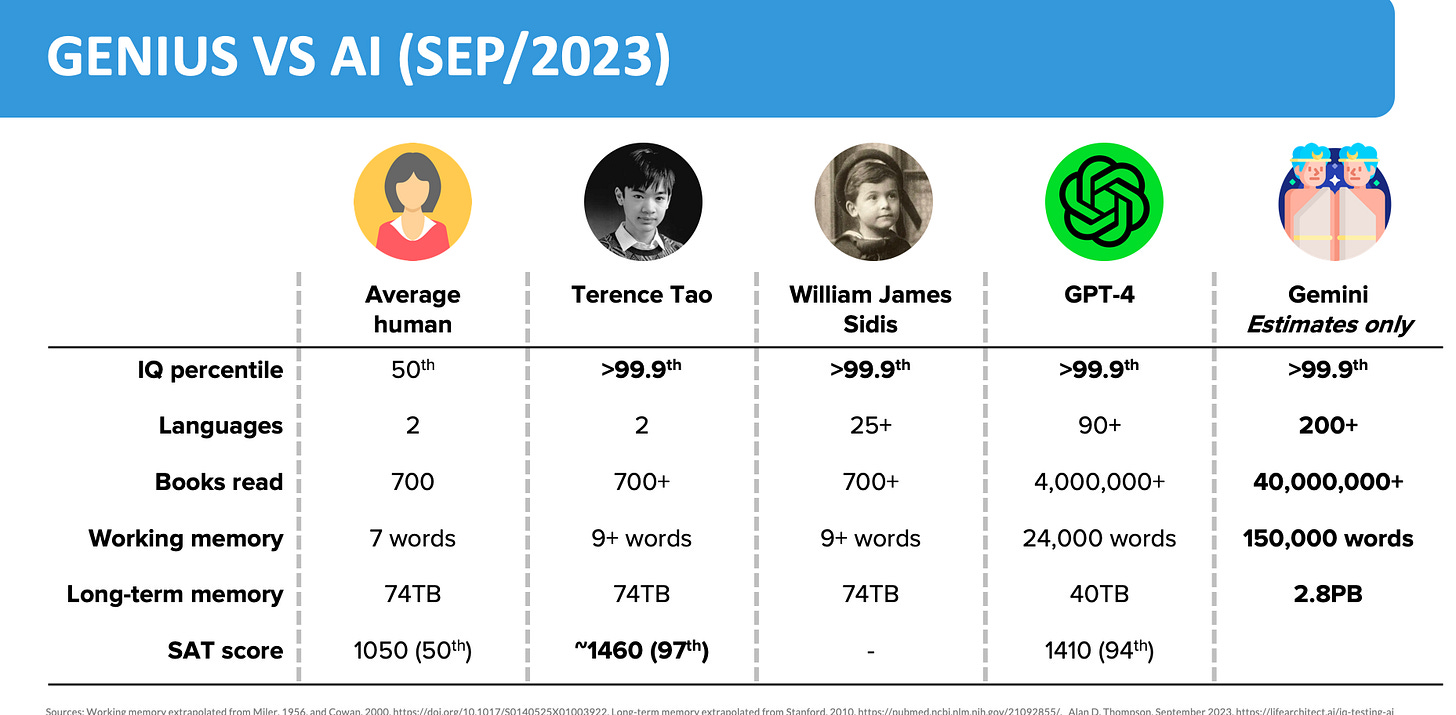

Already, Chat GPT-4 tests in the 99.9th percentile on IQ tests, which led the Chairman of Mensa International's Gifted Families program to resign and start presenting about “The New Irrelevance of Intelligence.” IQ and SAT tests assess the two types of intelligence our culture has valued most: linguistic and logical intelligence. Howard Gardner proposes five other types of under-appreciated intelligences: visual-spatial, body-kinesthetic, musical, interpersonal (social), and intrapersonal (self-awareness). My hunch is that these other intelligences will become more valuable over the next decade as AI eclipses linguistic and logical intelligence. But 20 years out, I expect that AI will outperform humans on all the other intelligences too.

Here’s a link to the moment in a podcast where Bloom shares his definition and then goes on to discuss whether humans have a unique capacity for moral reasoning, the subject of his recent piece in the New Yorker, How Moral Can A.I. Really Be? (Spoiler: Don’t we want machines to be more ethical than humans?)

Maybe your goal is just the enjoyment of solving calculus equations or the pleasure of reading a book in its original language, but it’s still a goal.

In his book, The Coming Wave, Suleyman expects an AI platform to pass this test in 3-5 years, which would completely upend e-commerce. It’s a great book that has left me scratching my chin in intrigue and horror. From Ars Technica’s reporting, it seems like we’re already getting close to passing the AI merchant test on Amazon.

Regardless of the goal’s morality.

Or maybe it will turn out not to be so uniquely human after all. Maybe future generations will all depend on AI life coaches to identify their goals. 🤳🔮🤷‍♂️

I thought Chris Nolan’s Oppenheimer was about an intelligent man who was gifted at achieving others’ goals but struggled to identify his own. (I have written before about the kind of resourceful people who get a great deal done without considering why, and the contemplative ones who consider every last detail before getting started.)

Good provocations for a Monday morning. If I’m honest, this question just makes me mad. “And how can we prove that human intelligence and consciousness are unique from computers?” I don’t want to prove it, but I guess a future world of Terminators that look like us and say things like us and maybe mock bleed like us means we need to refine our pattern assessments. I actually failed the NYT AI vs real photo test and realized I had no framework or tools to really understand what was fake or real. I think that’s what you’re getting at.

I feel like AI right now is making me double down on goals where commodification isn’t the goal. So taking photos of a shadow. Or a strip mall. AI is going to supplant stock photography and probably optimize for pseudo real shots of “young person looking at half dome.” But AI is built on scale. It won’t scale untold stories. Or that’s my hope. Once it does, I guess we will be screwed because at that point, culture will be outsourced.

Love this question. Have you read James Bridle's Ways of Being yet? The book goes into the nature of intelligence and explores it from three angles: human, natural, and artificial. Worth checking it out!